http://www.wired.co.uk/news/archive/2015-07/27/musk-hawking-ai-arms-race

A global robotic arms race "is virtually inevitable" unless a ban is imposed on autonomous weapons, Stephen Hawking, Elon Musk and 1,000 academics, researchers and public figures have warned.

In an open letter presented at the International Joint Conference on Artificial Intelligence in Buenos Aires, the Future of Life Institute signatories caution that "starting a military AI arms race is a bad idea, and should be prevented by a ban on offensive autonomous weapons beyond meaningful human control".

Although the letter, first reported by the Guardian, notes that "we believe that AI has great potential to benefit humanity in many ways, and that the goal of the field should be to do so", it concludes that "this technological trajectory is obvious: autonomous weapons will become the Kalashnikovs of tomorrow".

Joining Professor Hawking and SpaceX founder Elon Musk as signatories are Apple cofounder Steve Wozniak, linguist Noam Chomsky, Skype cofounder Jaan Tallinn and Stephen Goose, director of Human Rights Watch's arms division.

The UK says it is not developing lethal AI, but the capability to build such weapons already exists and is advancing fast: a recent report on the future of warfare, commissioned by the US military, predicts that "swarms of robots" will be ubiquitous by 2050. In response, experts and high-profile figures such as Musk have repeatedly called for limits on the development of deadly AI, even as peaceful autonomy becomes more central to virtually every other area of tech and industry. In June, the Future of Life Institute announced it would use a $10m donation from Elon Musk to fund 37 projects aimed at keeping AI "beneficial", with $1.5m dedicated to a new research centre in the UK run by Oxford and Cambridge universities.

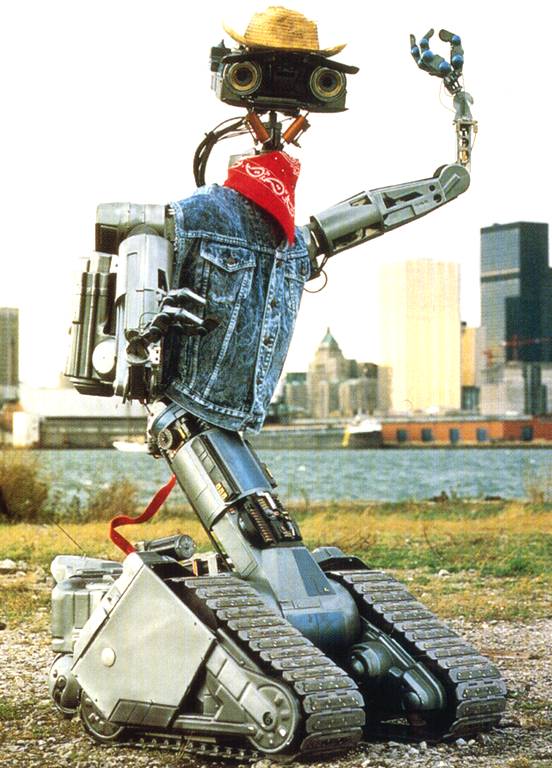

The latest letter starts by defining autonomous weapons as those which "select and engage targets without human intervention", a category that includes quadcopters able to search for and kill people but excludes remotely piloted missiles and drones. It also lists the arguments usually made in favour of such machines, such as reducing casualties among soldiers.